This is one that I kept coming back to (see Michael Plishka's visceral wonder comment below). It's a Southwestern white pine (Pinus strobiformis) that belongs to Greg Brenden (an earlier, smaller iteration in a different pot was shown at the 2010 U.S. National Bonsai Exhibition). The show booklet says the pot is by Ron Lang, but Ron told me that his wife Sharon made it and that he created the rough finish (see below). There were several trees in Lang pots at the exhibition. This photo and the close-up below are borrowed from Bonsai Penjing & More.

Yesterday’s post on judging bonsai provoked some thoughtful comments. Rather than digging myself in any deeper, I’ll let the commenters speak for themselves.

But first, I’d like to reiterate my view that the Artisans Cup was a major breakthrough in American and Western bonsai (here, here and here express this view). My hat is off to Ryan, the artists and everyone else involved, including the six judges, who are all highly accomplished bonsai artists and teachers, and key players in the propagation and development of the art of bonsai.

Colin Lewis’ comments (and others’) are below the photo.

This close-up of the pine above shows off the textures of the tree, the pot, the lichen and the moss.

We’ll start with Colin Lewis, who was one of the judges. BTW: I’ve had a long-term business relationship with Colin and often rely on his knowledge and judgement:

“As a judge at scores of bonsai events and one honored to join the other judges at the Artisans Cup, I can assure you that all judges agree the rubric used was the most efficient, most equitable and most accurate system of multiple judging possible. Any “intuitive” system you refer to leads to subjectivity, whereas in the points system each exhibit must be assessed independently based entirely on its own merits. The disparity of scores reflects the diversity of the judges, which was intentional in order to have as broad a spectrum of opinion as possible. And it worked, right?”

More comments below the photo.

The other third place finisher. When I (and several others) posted the winners last week, no one knew that there was a tie for third. It's a Japanese white pine that belongs to Konnor Jensen. I borrowed the photo from Michael Hagedorn's Crataegus Bonsai.

Carolyn (no last name given) writes:

“It would be like judging between a Picasso and a Rembrandt: both are superb, so which is better? Personal choice…” I would go a little further and say that both stand on their own and I’m not sure much is gained by making that choice.

More comments below the photo.

Here's another cascading bonsai that caught my attention. It's a Mountain Hemlock (Tsuga mertensiana) that belongs to Anthony Fajarillo. I borrowed the photo from Scott Lee.

Here’s what Michael Plishka wrote:

“I’ve often wondered about scoring systems and how they may reward doing things “by the book”, but may not reward evoked visceral wonder.

I am reminded of the work by Architect/Mathematician Christopher Alexander, especially, ‘The Nature of Order: An Essay on the Art of Building and the Nature of the Universe, Book 1 – The Phenomenon of Life’.

He believes that there are certain designs that elevate people, make them feel more alive, and they have common traits that he elucidates in his book. He also bemoans the fact that structures showcasing the architectural trends du jour are the ones that get lauded and awarded, even though they are not very uplifting or inspiring…”

“Now, having said that, the winning bonsai in competitions are not in any way of the same genre as the non-life-giving architectural pieces Alexander discusses. (In fact, many of the ‘rules’ that Alexander discusses could be seen in these wondrous pieces of living art.) Still, his main point is that the categories used to judge whether something is good or not, may not truly be reflective of what is existentially good and beautiful, but instead reflect what is in vogue (unless one uses his system ;-)).

His point is valid, I think, in that it challenges us to examine whether the categories used in judging simply (but with precision) touch on technical ability, or whether they are categories that reflect evoked visceral feelings of beauty, wonder, joy and mystery.

While not a scientific study, I have seen award-winning trees at shows that are technically perfect (and beautiful!) arouse less interest among visitors than lesser award-winning trees that have a je ne sais quoi that makes people stop, take pictures, look from various angles and discuss.

How do *those* types of feelings get captured in a grading system? Perhaps Alexander’s criteria could be adapted for bonsai?

Until that happens, the current system of scoring has great educational value, and it creates a level, relatively objective, though perhaps imperfect, way to judge trees.”

John Kirby writes:

“I think the Judges did a marvelous job. There will always be complaints, concerns, extra analysis. This was an open, thoughtfully conducted and carefully executed process. The way you know it was a success is that people are still talking about it and there is still buzz. I had a tree in the show, a really good tree; it didn’t place, but I have no doubts about the process. Sure, we can debate normalization, dropping scores to eliminate outliers, judges using the full range of scores available, and whether you can score a show in a way that will satisfy 100% of the people who see it. I will say this: the trees that won were awesome trees, and the judging worked, yet there will never be a perfect system of judging. What I like about this is that the results are open and transparent, subject to Monday-morning analysis; this is an unusual and refreshing outcome of the Artisans Cup.”

Ann Mudie has this to say:

“Further proof that bonsai is an art form the same as any other, inspiring individual opinions, attitudes and judgements. There is no right or wrong – just personal approaches and views.”

And finally (so far at least) Ceolaf writes:

“I’m having trouble figuring out how to talk about this topic without sounding like a hater. Let me add a little bit here.

The idea that you should drop the high and low scores is based in a fundamental distrust of your judges/scorers. It assumes that fluke scores happen, and should be disregarded.

This is VERY different from assuming that your judges/scorers are experienced and expert. It is very different from allowing them to have different criteria, and different weightings on those criteria, that can produce different extremes.

The former leads us to believe that the extreme scores do NOT contain valuable information. But if you follow the latter line of reasoning, then there IS valuable information in those scores and it should not be disregarded.

Yes, I am saying that the practice of dropping high and low scores is misapplied when you believe you have expert judges (or well-trained judges). It is incredibly disrespectful to the judges. In this case, in which Ryan and Chelsea were so proud of the judges they selected and the procedures they designed, there is no line of reasoning of logic that follows from that to the dropping of high and low scores.

Instead, TAC should have followed another common practice. They should have kept all five scores, and then dropped the high and low to break ties.
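To make Ceolaf's proposal concrete, here is a minimal Python sketch that ranks trees on the total of all five scores and uses the trimmed sum (one highest and one lowest score dropped) only to break ties, next to a ranking on the trimmed sum alone. The tree names, the scores and the function names are all hypothetical, invented for illustration; they are not the Artisans Cup tallies.

```python
def trimmed_sum(marks):
    """Sum of the scores left after dropping one lowest and one highest."""
    return sum(sorted(marks)[1:-1])

def rank_full_then_trimmed(table):
    """Rank on the total of all scores; use the trimmed sum only to break ties."""
    return sorted(table, key=lambda t: (sum(table[t]), trimmed_sum(table[t])), reverse=True)

def rank_trimmed_only(table):
    """Rank on the trimmed sum alone (high and low always discarded)."""
    return sorted(table, key=lambda t: trimmed_sum(table[t]), reverse=True)

# Invented five-judge scores for three hypothetical trees.
scores = {
    "tree_A": [45, 50, 52, 53, 60],
    "tree_B": [47, 52, 52, 53, 56],
    "tree_C": [40, 53, 53, 53, 55],
}

print(rank_full_then_trimmed(scores))  # ['tree_B', 'tree_A', 'tree_C']
print(rank_trimmed_only(scores))       # ['tree_C', 'tree_B', 'tree_A']
```

With these made-up numbers, tree_A and tree_B tie at 260 on the full total and the trimmed sum (155 vs. 157) separates them. Under the trimmed-only rule, tree_C's single very low score is discarded, which lifts it from last place to first.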

Keep posting on this topic (i.e., scoring) and I’ll keep commenting. I study scoring and assessment as a big piece of my professional work and will probably take up every opportunity to try to apply what we actually know from that field to bonsai exhibition scoring.”

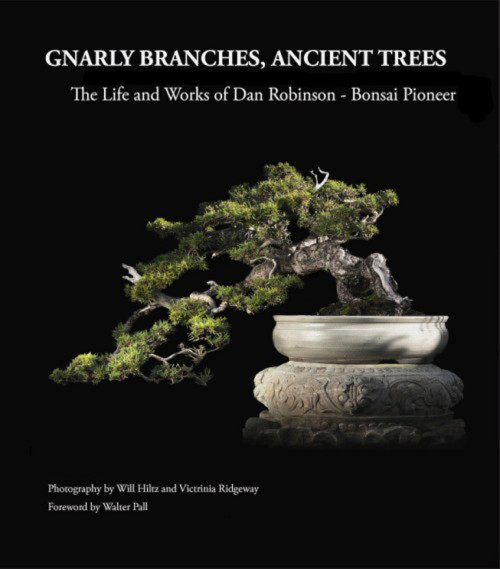

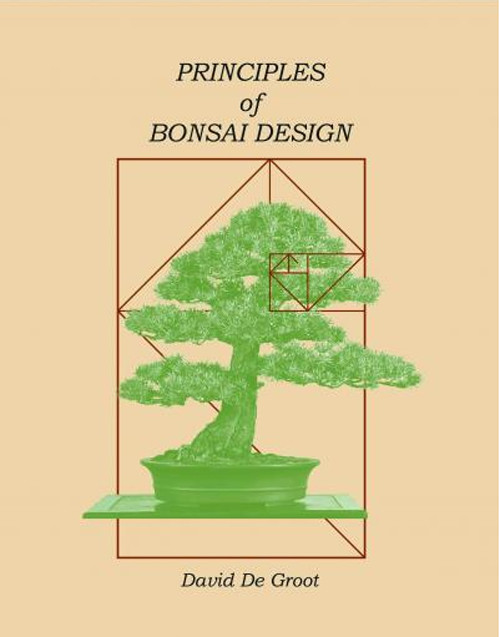

Below are two books. One is by Dave De Groot, one of the Artisans Cup judges, and the other is about Dan Robinson and his bonsai. Dan had two very prominent bonsai at the Cup.

This popular book is now in its second printing. Not too many $50.00 bonsai books are reprinted, but if you know this one, you’ll know why. Available at Stone Lantern.

Here's another great bonsai book that's going to be headed for a second printing if current sales are any indication. Also available at Stone Lantern.

Colin wrote, “whereas in the points system each exhibit must be assessed independently based entirely on its own merits.”

I’m not certain this is QUITE what we want to happen, particularly when judges each get to decide (more or less secretly) for themselves what those merits are.

Boon said at the “Ask the Judges” panel that he essentially used his standard procedure (i.e., something like 10 points for the trunk, 5 points for each of a few (3?) other attributes, and 5 points for artistic impression). We know how Boon weights different criteria. But we do not know how others weigh different criteria, or which criteria they even consider.

I do not think there is a problem with bonsai judges selecting their own criteria or selecting their own weights for each criterion. And I can, at least for now, accept that different expert judges may have quite different views on the quality of a particular aspect of a tree (e.g., the trunk or the nebari). And I don’t think having some ineffable, undefinable criterion for something like impression, impact or artistic something-or-other is a problem.

But there is a serious lack of transparency both in each judge’s general approach to scoring and in how each judge saw the various components of the score for each tree.

Except for Boon, it appears that the judges at The Artisans Cup (and, obviously, elsewhere) use holistic scoring, even when they have a points system. This is still entirely subjective and opaque. Yes, use of a points system is an improvement over simply ranking trees, but there’s a long way to go before owners, artists, practitioners and/or enthusiasts can learn anything from the judging of trees at exhibitions.

I am not an important person at all, but I want to contribute to the discussion. I think it is (and will always be) difficult to rank art. Art is in the eye of the beholder; it is about emotion, and about the person. Just as you cannot put love or happiness into numbers, I think it is hard to put a tree's beauty into numbers. Numbered scales are just an attempt to put values on abstract criteria.

I don’t mean to criticise the awards given here, or to say whether the judges were right or wrong. I just want to stress that the event had judging criteria, the judges did their best job under them, and we all should agree with the results.

The trees presented differ from each other in species, style, shape and various other factors, so they are incomparable, and it is tough to say why one is better than another and prove it numerically. It's like you want to compare songs from rock’n’roll band and violin quartet.

So, there are criteria, the judges gave their opinion, all results are visible and that’s it: in this particular configuration we have a winner. Next time the trees may be different, the criteria may change, the judges may be different, and again the exhibition will have a winner. No one should have a problem with that.

Alex,

A few things.

1) I agree that it is difficult to judge art. I am not sure about the appropriateness of competitions for an art like bonsai. But we have to accept that as a given, for the purposes of this discussion.

2) I want to stress that the judges made clear in their “Ask the Judges” session that none of them judged any differently for being given the “rubric.” In fact, they did NOT use the criteria they were given. Rather, they judged the way they would have without the criteria. Furthermore, they explicitly said that they did NOT judge the trees consistently. (Whether consistency in criteria is a good or bad idea is not the question. Rather, their instructions told them to be consistent, and they were not.)

3) You wrote, “We all should agree with the results.” No, I’m afraid I cannot sign on to that, for a few reasons.

* Part of what makes judging interesting is that it spurs conversation about agreement or disagreement among the audience and others. Judging can open up further conversation, and there’s no need to view it as shutting down conversation.

* If one believes that there are systemic problems with judging that CAN be addressed for the betterment of bonsai in America generally and that would further the education of those in bonsai at a variety of levels, there is a sort of moral imperative to make that case. High-publicity events like the Artisans Cup provide a great opportunity to make that case.

4) You raised the issue of comparability. I think that is an important one. I agree, it is too hard to “compare songs from rock’n’roll band and violin quartet.” This is part of why many kinds of awards are broken up into categories. In the case of music, we have different events for different genres, and the Grammys have 30 categories of music, and 83 awards across the 30 categories.

I would suggest that if trees cannot be judged by the same criteria, they clearly belong in different categories. Otherwise, attempts to align the different categories add another level of arbitrariness.

********************************

You are correct to point out that, with an art form, we will never be able to nail down a meaningful objective score for a bonsai. No doubt. But we can do a lot to make the meaning of each score more transparent and to set some limits around some of the more arbitrary aspects of judging/scoring. Of course, among high-integrity judges there will always be some amount of arbitrariness and personal subjective judgment (though not capriciousness or ill intent), but that does not have to prevent us from applying the lessons of scoring in other fields to bonsai.

ceolaf, I did not intend to oppose your opinion. English is not my native language, so I apologize if my post came across with the wrong tone. I agree that the final judging result should not stop the conversation afterward.

What I am saying is that judging is not easy. In soccer, the referee may (with his best efforts, but without a clear view) blow his whistle for a penalty kick and decide the victory for one team, which then becomes an official 1:0, even though discussion afterward is allowed.

In bonsai there is no universal and ultimate justice, and judges are part of the game. It is hard to say whether their work is better or worse, since you cannot compare their results with some ideal, universal score or standard.

So for me, the best judging system combines professional and amateur personal experience: a kind of voting system, not points for the trunk and points for the crown… You like the tree or you don’t, you feel it or not, the tree brings emotion and attention or not. This usually ends up as a single category, “public votes (likeness),” which is usually different from professional judging, where nebari and branch curve can be (or should be, by the rules?) expressed numerically. I would like the numeric values to decide ties in the “public vote” results.
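Alex's suggestion (public votes decide the order, numeric judging scores only break ties) amounts to sorting on a pair of keys. A minimal Python sketch, with invented vote counts and points; the field names `votes` and `points` are hypothetical.

```python
# Invented tallies; "votes" and "points" are hypothetical field names.
entries = {
    "entry_1": {"votes": 120, "points": 48},
    "entry_2": {"votes": 120, "points": 52},
    "entry_3": {"votes": 95, "points": 57},
}

def rank_by_votes_then_points(table):
    """Public votes decide the order; judges' points only break vote ties."""
    return sorted(table, key=lambda e: (table[e]["votes"], table[e]["points"]), reverse=True)

print(rank_by_votes_then_points(entries))  # ['entry_2', 'entry_1', 'entry_3']
```

Here entry_3 has the most points but the fewest votes, so it still ranks last; points only matter between entry_1 and entry_2, which tie on votes.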

And one thing you got right: categories are the solution. Otherwise, at the Olympics, 100m runners would compete with javelin throwers.

One thing about the tallying of the scores that bothered me, and that seems unnecessarily problematic, was the whole tie-breaker situation. Many folks were buzzing about how it was done and whether it was the fairest way to do it. Really, the whole problem could have been avoided. Check this out: if you review the scoring, which was five judges’ scores with the lowest and the highest thrown out, you can see that three scores were left, so they added them together, divided by three and then did conventional rounding to get a whole-number score. So, although there were two entries scoring 51 on the rounded tally sheet, if you compute the scores using un-rounded math and include the decimal, the scores were Entry #32 = 51.33 and Entry #52 = 51.0. The next slot was Entry #4 = 49.66 and Entry #27 = 50.33. So I would think it would have been easier and fairer to just defer to the math, which would have awarded 1st place to Entry #32, 2nd place to Entry #52 and 3rd place to Entry #27. Am I doing something wrong, or am I a genius?
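The commenter's arithmetic can be sketched in a few lines of Python. The scores below are hypothetical, chosen so that two entries both round to 51 while their exact trimmed means differ (51.33 vs. 51.00); they are not the real tally sheets for entries #32 and #52. `Fraction` keeps each average exact, and rounding is done half-up to match "conventional rounding" (Python's built-in `round()` rounds halves to even).

```python
from fractions import Fraction
import math

def trimmed_mean(marks):
    """Average of the scores left after dropping one lowest and one highest."""
    kept = sorted(marks)[1:-1]
    return Fraction(sum(kept), len(kept))

def round_half_up(x):
    """Conventional rounding: .5 and above rounds up."""
    return math.floor(x + Fraction(1, 2))

# Hypothetical five-judge scores, not the real tally sheets.
entries = {
    "entry_X": [50, 51, 51, 52, 58],
    "entry_Y": [46, 50, 51, 52, 54],
}

for name, marks in entries.items():
    exact = trimmed_mean(marks)
    print(f"{name}: rounded={round_half_up(exact)}, exact={float(exact):.2f}")
# entry_X: rounded=51, exact=51.33
# entry_Y: rounded=51, exact=51.00

# Ranking on the exact mean separates the two entries.
ranked = sorted(entries, key=lambda n: trimmed_mean(entries[n]), reverse=True)
print(ranked)  # ['entry_X', 'entry_Y']
```

Ranking on the exact value breaks this particular tie, although two entries can still tie exactly, so some tie-break rule is needed either way.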

Just a quick response to Ceolaf:

You wrote: “In this case, in which Ryan and Chelsea were so proud of the judges they selected and the procedures they designed, there is no line of reasoning of logic that follows from that to the dropping of high and low scores.”

I would argue that there is one line of reasoning that I believe made this a very logical decision: the bonsai community is very small. At least several of these judges have worked personally on several of the trees. This doesn’t mean that they can’t think critically or successfully judge the tree anyway. But there is the possibility that they may favor that tree, or that they may judge it even more critically than if they hadn’t worked on it. The fact that high and low scores were dropped saves them both from themselves and from others’ scrutiny in case an incredibly high or low score had bumped a tree into either winning or losing.

I noticed that one of Boon’s highest scores (maybe his highest) was given to John’s tree; John works very closely with Boon, and very likely on this tree. I’m not at all saying that it was unfair, but if that score had caused John’s tree to win, there would have been no end of people accusing Boon of favoritism. As it was, the practice of discarding high and low scores prevented that from happening.

I cast absolutely no judgment on Boon’s scoring. I’m only using this as an example of the kind of thing that could have happened if all 5 scores were used for each tree.

Oops! I just reviewed the scores for John’s tree again, and it appears that Boon was actually the lowest score on that particular tree. So, my example scenario above is the exact opposite of what actually happened. Which offers the possibility that Boon was more critical of that tree because he may have worked on it… Either way, the system worked. :)

Dan,

I’m sorry, but you have a logical leap there.

You suggest that judges’ scores for trees on which they have worked are not trustworthy. OK. Let’s go with THAT line of reasoning.

That would tell us the judges should acknowledge such situations so that they can skip scoring those trees. Ta-dah! Problem solved!

But dropping high and low scores does NOT solve that problem. It only addresses the situation when the judge has worked on the tree AND has given it either the highest or lowest score. So, it’s actually NOT a good way to keep judges from scoring trees they have worked on in the past.

Furthermore, your line of reasoning does NOT lead us to drop the high and low score for EVERY tree.

So, as I wrote earlier, “there is no line of reasoning of logic that follows from that to the dropping of high and low scores.”

Dan,

Let me explain why your proposed line of reasoning (i.e., drop a judge’s score if s/he has previously worked on that tree and the score is the high or low score) falls apart mathematically.

Imagine 5 judges (A, B, C, D & E). Imagine that A is the toughest judge and E the easiest judge.

The relative harshness of each judge doesn’t matter, because if every judge scores every tree then every tree gets the same mix of harshness. Sure, A was tough on tree #18, but s/he was equally hard on all the other trees. (This is why you either need well-calibrated judges or need every judge to score each and every tree.)
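Ceolaf's point about harshness can be checked with a toy model, assuming (as a simplification not stated in the comment) that each judge shifts every score by a fixed amount. When all five judges score both trees, the shifts add the same amount to each tree and the better tree stays ahead; if the toughest judge, A, skips one tree, that tree's average rises and the order flips. The tree names, qualities and offsets are all invented.

```python
# Toy model (an assumption): each judge adds a fixed offset to every score.
quality = {"tree_1": 50, "tree_2": 51}                  # invented "true" quality
harshness = {"A": -4, "B": -1, "C": 0, "D": 2, "E": 5}  # A toughest, E easiest

def mean_score(tree, judges):
    """Average score a tree receives from the given judges."""
    marks = [quality[tree] + harshness[j] for j in judges]
    return sum(marks) / len(marks)

everyone = list(harshness)
print(mean_score("tree_1", everyone), mean_score("tree_2", everyone))
# 50.4 51.4  (same mix of harshness, so tree_2 stays ahead)

without_a = [j for j in everyone if j != "A"]
print(mean_score("tree_1", without_a), mean_score("tree_2", everyone))
# 51.5 51.4  (tree_1 escapes the toughest judge and now leads)
```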

The same story works for A, who might end up being LESS likely to be low score on trees s/he has worked on.

What happens is that 40% of the judges (i.e., the easiest and the harshest) can actually have their scores dropped LESS often when judging trees they have worked on. All it takes is for judges to differ in harshness (as they always do) and to make efforts to compensate for their biases.
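The overcompensation scenario can be sketched the same way, again assuming fixed per-judge offsets (invented numbers) and a hypothetical helper that reports which judges' scores would be discarded as lowest and highest. On an ordinary tree, A and E are dropped. When E docks extra points on a tree s/he worked on, E lands in the middle and D is dropped instead; likewise, when A adds points, B becomes the low score and A's score is kept.

```python
# Invented fixed offsets: A is the toughest judge, E the easiest.
harshness = {"A": -4, "B": -1, "C": 0, "D": 2, "E": 5}

def scores_for(quality, adjustments=None):
    """Each judge's score for one tree, plus any deliberate compensation."""
    adjustments = adjustments or {}
    return {j: quality + off + adjustments.get(j, 0) for j, off in harshness.items()}

def dropped(scores):
    """Judges whose scores are discarded, as (lowest, highest)."""
    return min(scores, key=scores.get), max(scores, key=scores.get)

print(dropped(scores_for(50)))             # ('A', 'E')
print(dropped(scores_for(50, {"E": -4})))  # ('A', 'D'): E overcompensates, E's score is kept
print(dropped(scores_for(50, {"A": 5})))   # ('B', 'E'): A overcompensates, A's score is kept
```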

OR…..

Judge E does as you suggest, and is just naturally/unconsciously harder on trees s/he has worked on, and Judge A is just naturally/unconsciously easier on trees s/he has worked on.

You see, your objection (i.e., judges scoring of trees they themselves have worked on might not be as trustworthy) is not actually covered by dropping the high and low scores.

It is much simpler to take your objection head-on and tell judges not to score trees they have worked on. So long as you trust the integrity of your judges, you can avoid the unconscious bias you suggest (or the overcompensation that I suggest).